It’s happened to me, it’s happened to you, it’s happened more than a million times and it will keep happening: you run out of disk space, or a disk fails. Nowadays you are using ZFS and, because you don’t need a fancy RAIDZ yet, your data lives on a mirror. Then one disk dies. Or, better yet, you’ve bought new, bigger disks for that box. Here is how to replace a disk in a ZFS mirror pool, on FreeBSD of course. The procedure works whether the replacement disk is exactly the same size or bigger. If in doubt at any moment, consult the FreeBSD Handbook.

Disclaimer. Data loss is a risk inherent to these operations. Backups, or extra copies, are necessary to avoid such losses. I shall not be liable for the process described here, follow it at your own discretion.

This ‘how to’ assumes the reader has some knowledge of how FreeBSD identifies devices and a basic understanding of how the OS and ZFS work.

This process assumes a box containing a pair of ZFS-formatted disks set up as a mirror. A different disk arrangement works too, as long as it is built on mirrors: for example, a pool of two mirror vdevs, each containing a pair of disks, for a total of four disks in the system. The process also assumes one of those disks has been singled out for replacement with a new one (same size or bigger), whether it has failed completely, is showing problems, or is still working fine.

If the box is up and running and its disks are not hot-swappable, we shut it down first. We can then swap the old disk for a fresh one and start the box up again.

If you find the articles in Adminbyaccident.com useful to you, please consider making a donation.

Use this link to get $200 credit at DigitalOcean and support Adminbyaccident.com costs.

Get $100 credit for free at Vultr using this link and support Adminbyaccident.com costs.

Mind Vultr supports FreeBSD on their VPS offer.

1.- Identify the ZFS pool status.

We log in to the box and check the pool status.

albert@BSD-S:~ % zpool status

pool: zroot

state: DEGRADED

status: One or more devices could not be used because the label is missing or

invalid. Sufficient replicas exist for the pool to continue

functioning in a degraded state.

action: Replace the device using 'zpool replace'.

see: http://illumos.org/msg/ZFS-8000-4J

scan: resilvered 971M in 0 days 00:00:11 with 0 errors on Fri Jan 8 23:52:42 2021

config:

NAME STATE READ WRITE CKSUM

zroot DEGRADED 0 0 0

mirror-0 DEGRADED 0 0 0

4905017922278967207 FAULTED 0 0 0 was /dev/ada0p3

ada0p3 ONLINE 0 0 0

errors: No known data errors

albert@BSD-S:~ %

As you can see, the pool is degraded because one disk in the mirror is missing. That is either because we have already swapped one of the disks for a new one, or because one of the two has issues. If a disk was failing, you will have seen this state before swapping it; if you are just swapping healthy old disks for new ones, your pool status was probably fine until the swap.
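When several boxes need checking, the pool name and state can be pulled out of that output instead of being read by eye. The following is a minimal sketch, not part of ZFS: `pool_state` is a hypothetical helper that reads `zpool status` output on standard input and assumes the `pool:` and `state:` lines look like the ones shown above.

```shell
#!/bin/sh
# pool_state (hypothetical helper): read `zpool status` output on stdin
# and print "<pool> <state>" for every pool found in it.
pool_state() {
    awk '
        $1 == "pool:"  { pool = $2 }        # remember the current pool name
        $1 == "state:" { print pool, $2 }   # emit it with its state
    '
}
```

It would be used as `zpool status | pool_state`, which for the pool above should print `zroot DEGRADED`. `zpool status -x` is a built-in alternative that only reports pools with problems.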

We now look for the new drive we have put in place of the old one.

albert@BSD-S:~ % ll /dev/ada*

crw-r----- 1 root operator 0x55 Jan 8 23:47 /dev/ada0

crw-r----- 1 root operator 0x57 Jan 8 23:47 /dev/ada0p1

crw-r----- 1 root operator 0x58 Jan 8 23:47 /dev/ada0p2

crw-r----- 1 root operator 0x59 Jan 8 23:47 /dev/ada0p3

crw-r----- 1 root operator 0x56 Jan 8 23:47 /dev/ada1

albert@BSD-S:~ %

The ada0 device is the one already in use and contains three partitions. The ada1 device, as can be seen, isn’t partitioned at all. It needs to be.

2.- Format and partition the newly added disk.
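The same check can be sketched as a small filter. `unpartitioned_disks` is a hypothetical helper that assumes device nodes are named `adaN` and `adaNpM`, as on this box; other drivers (`da`, `nvd`, `vtbd`...) would need the patterns adjusted.

```shell
#!/bin/sh
# unpartitioned_disks (hypothetical helper): read device paths, one per
# line, on stdin and print the whole-disk nodes (/dev/adaN) that have no
# partition nodes (/dev/adaNpM) next to them.
unpartitioned_disks() {
    awk '
        /^\/dev\/ada[0-9]+$/        { disks[$0] = 1; next }
        /^\/dev\/ada[0-9]+p[0-9]+$/ { d = $0; sub(/p[0-9]+$/, "", d); parted[d] = 1 }
        END { for (d in disks) if (!(d in parted)) print d }
    ' | sort
}
```

Feeding it a plain listing, for example `ls /dev/ada* | unpartitioned_disks`, should print only `/dev/ada1` for the devices listed above.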

Our pool had issues or we just want to expand its capacity by adding bigger disks. The new replacement is unformatted and we need to give it some ZFS.

The original mirror contained two disks of 32GB each. One has been replaced by a larger 64GB disk (the same steps apply to same-sized disks). As we can see below, ada0 is the old 32GB disk and ada1 is the newly added 64GB one.

albert@BSD-S:~ % geom disk list

Geom name: ada0

Providers:

1. Name: ada0

Mediasize: 34359738368 (32G)

Sectorsize: 512

Mode: r2w2e3

descr: VBOX HARDDISK

ident: VBb6219070-bce6fc56

rotationrate: unknown

fwsectors: 63

fwheads: 16

Geom name: ada1

Providers:

1. Name: ada1

Mediasize: 68719476736 (64G)

Sectorsize: 512

Mode: r0w0e0

descr: VBOX HARDDISK

ident: VB60d99b44-f8cd9e04

rotationrate: unknown

fwsectors: 63

fwheads: 16

albert@BSD-S:~ %

Now we are sure which disk is which, so we don’t get confused about which one to work on. We can proceed with the partitioning and formatting.

‘ada0’ is the original disk where data is stored.

‘ada1’ is the new disk we have put into the system.
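To keep a record of which disk is which before touching anything, the relevant fields can be condensed into one line per disk. This is only a sketch: `disk_summary` is a hypothetical helper, and it assumes the `Geom name:`, `Mediasize:` and `ident:` fields appear in the order shown in the output above.

```shell
#!/bin/sh
# disk_summary (hypothetical helper): read `geom disk list` output on
# stdin and print "<name> <size in bytes> <ident>" for each disk.
disk_summary() {
    awk '
        $1 == "Geom" && $2 == "name:" { name = $3 }   # start of a disk entry
        $1 == "Mediasize:"            { size = $2 }   # raw size in bytes
        $1 == "ident:"                { print name, size, $2 }
    '
}
```

Run as `geom disk list | disk_summary`. The ident string (a serial number on real hardware) is the safest way to match a device name to a physical drive.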

Because it’s a mirror of a boot disk, we will create the same partitions we previously had. Later on, when we attach this new device to the pool, we will add the boot code so that if the old ada0 disk fails, this new one can take over and boot the system.

We create the partition table on the new disk ada1.

albert@BSD-S:~ % sudo gpart create -s gpt /dev/ada1

ada1 created

albert@BSD-S:~ %

We make the necessary partitions on the disk.

albert@BSD-S:~ % sudo gpart add -t freebsd-boot -s 512k /dev/ada1

ada1p1 added

albert@BSD-S:~ %

albert@BSD-S:~ % sudo gpart add -t freebsd-swap -a 4K -s 2048M /dev/ada1

ada1p2 added

albert@BSD-S:~ %

albert@BSD-S:~ % sudo gpart add -t freebsd-zfs -a 4K /dev/ada1

ada1p3 added

albert@BSD-S:~ %
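Since these four commands are destructive if pointed at the wrong disk, it can help to print them for review first. The sketch below only echoes the commands used above; `partition_plan` is a hypothetical helper, and the 2048M default swap size is simply the value used in this article.

```shell
#!/bin/sh
# partition_plan DISK [SWAP_SIZE] (hypothetical helper): print, without
# running them, the gpart commands used above for the given disk.
partition_plan() {
    disk=$1
    swap=${2:-2048M}   # default taken from this article's layout
    echo "gpart create -s gpt /dev/$disk"
    echo "gpart add -t freebsd-boot -s 512k /dev/$disk"
    echo "gpart add -t freebsd-swap -a 4K -s $swap /dev/$disk"
    echo "gpart add -t freebsd-zfs -a 4K /dev/$disk"
}
```

`partition_plan ada1` prints the plan for the new disk. Once it has been checked against the `geom disk list` output, the lines can be run by hand with sudo.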

Now that the new disk has been partitioned and formatted we can add it to the pool.

3.- Add the new disk to the pool.

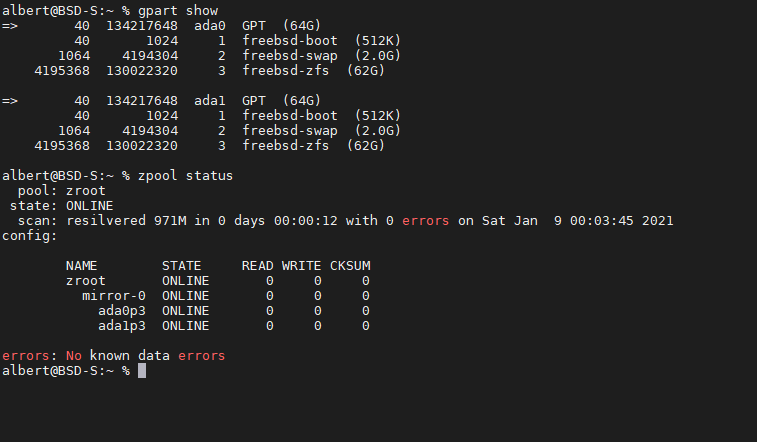

We have partitioned and formatted the new disk, and we want to make sure everything is in place. With the ‘gpart’ command we can check that those partitions exist.

albert@BSD-S:~ % gpart show

=> 40 67108784 ada0 GPT (32G)

40 1024 1 freebsd-boot (512K)

1064 984 - free - (492K)

2048 4194304 2 freebsd-swap (2.0G)

4196352 62910464 3 freebsd-zfs (30G)

67106816 2008 - free - (1.0M)

=> 40 134217648 ada1 GPT (64G)

40 1024 1 freebsd-boot (512K)

1064 4194304 2 freebsd-swap (2.0G)

4195368 130022320 3 freebsd-zfs (62G)

albert@BSD-S:~ %
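The comparison between both disks can also be sketched as a filter over that output. `partition_types` is a hypothetical helper that assumes the column layout shown above: a `=>` header line per disk, then one line per partition with the index in the third column.

```shell
#!/bin/sh
# partition_types DISK (hypothetical helper): read `gpart show` output on
# stdin and print the partition types found on DISK, in on-disk order.
partition_types() {
    awk -v disk="$1" '
        $1 == "=>" { current = $4; next }                  # header line of a disk
        current == disk && $3 ~ /^[0-9]+$/ { print $4 }    # skip "- free -" rows
    '
}
```

If `gpart show | partition_types ada0` and `gpart show | partition_types ada1` print the same three types in the same order, the new disk mirrors the old layout.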

We can now add this disk (ada1) to the pool.

albert@BSD-S:~ % sudo zpool attach zroot /dev/ada0p3 /dev/ada1p3

Make sure to wait until resilver is done before rebooting.

If you boot from pool 'zroot', you may need to update

boot code on newly attached disk '/dev/ada1p3'.

Assuming you use GPT partitioning and 'da0' is your new boot disk

you may use the following command:

gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i 1 da0

albert@BSD-S:~ %

Let’s add the boot code to the new disk so in case the other one (the old one) fails this system can still boot.

albert@BSD-S:~ % sudo gpart bootcode -b /boot/pmbr -p /boot/gptzfsboot -i 1 ada1

partcode written to ada1p1

bootcode written to ada1

albert@BSD-S:~ %

Important. Mind that the pool is now being resilvered, so the data contained in ada0p3 is being copied (mirrored) to ada1p3. Only once the process has finished can we reboot or shut down this system (if needed because the disks are not hot-swappable). The ‘zpool status’ command tells us whether the disks are still being worked on or the pool is stable and fully working: while a resilver is running, it shows up in the output of that command. Wait until it ends. It can take hours, so be patient.

So far we have swapped the old disk, formatted the new one and added it to the pool. We have also made it bootable so that it can take over if the remaining original disk fails. There is one step left though: removing the old drive from the pool.
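Waiting for the resilver can be left to a small polling loop. This is a sketch under one assumption: that the `scan:` line of `zpool status` contains the text `resilver in progress` while the resilver is running. Both function names are hypothetical.

```shell
#!/bin/sh
# resilver_running (hypothetical helper): read `zpool status` output on
# stdin; succeed (exit 0) only while a resilver is reported in progress.
resilver_running() {
    grep -q 'resilver in progress'
}

# wait_for_resilver POOL [SECONDS] (hypothetical helper): poll the pool
# every SECONDS (default 60) until no resilver is reported.
wait_for_resilver() {
    pool=$1
    interval=${2:-60}
    while zpool status "$pool" | resilver_running; do
        sleep "$interval"
    done
    echo "resilver on $pool finished"
}
```

`wait_for_resilver zroot 30` would return once the resilver is done. On systems running OpenZFS 2.0 or later, `zpool wait -t resilver zroot` should do the same without polling.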

4.- Remove the original disk from the pool.

Although we have replaced the original disk, formatted a new one, added it to the pool and let it resilver, the pool is still marked as ‘DEGRADED’.

albert@BSD-S:~ % zpool status

pool: zroot

state: DEGRADED

status: One or more devices could not be used because the label is missing or

invalid. Sufficient replicas exist for the pool to continue

functioning in a degraded state.

action: Replace the device using 'zpool replace'.

see: http://illumos.org/msg/ZFS-8000-4J

scan: resilvered 971M in 0 days 00:00:12 with 0 errors on Sat Jan 9 00:03:45 2021

config:

NAME STATE READ WRITE CKSUM

zroot DEGRADED 0 0 0

mirror-0 DEGRADED 0 0 0

4905017922278967207 FAULTED 0 0 0 was /dev/ada0p3

ada0p3 ONLINE 0 0 0

ada1p3 ONLINE 0 0 0

errors: No known data errors

albert@BSD-S:~ %

Why? The pool still expects its original disk, now listed under the numeric identifier 4905017922278967207, and that disk isn’t present anymore. Obviously the ZFS pool complains about it. Let’s fix this.

albert@BSD-S:~ % sudo zpool detach zroot 4905017922278967207

albert@BSD-S:~ %
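That long number is easy to mistype. It can be extracted from the status output instead; the sketch below assumes missing members are listed the way they are above, as a numeric name followed by `was` and the former device path. `stale_members` is a hypothetical helper.

```shell
#!/bin/sh
# stale_members (hypothetical helper): read `zpool status` output on stdin
# and print "<numeric id> <former device path>" for every pool member that
# is listed by number instead of by device name.
stale_members() {
    awk '$1 ~ /^[0-9]+$/ && $6 == "was" { print $1, $7 }'
}
```

`zpool status zroot | stale_members` should print `4905017922278967207 /dev/ada0p3` for the pool above, and the first field is what ‘zpool detach’ takes as its argument.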

Now we check whether the change has taken effect on the pool.

albert@BSD-S:~ % zpool status

pool: zroot

state: ONLINE

scan: resilvered 971M in 0 days 00:00:12 with 0 errors on Sat Jan 9 00:03:45 2021

config:

NAME STATE READ WRITE CKSUM

zroot ONLINE 0 0 0

mirror-0 ONLINE 0 0 0

ada0p3 ONLINE 0 0 0

ada1p3 ONLINE 0 0 0

errors: No known data errors

albert@BSD-S:~ %

It has. The pool is now in the ‘ONLINE’ state with no issues, and the disk has been replaced and filled with the data. Mind the ‘resilvered’ part, where we can see the process has ended satisfactorily. Plus, the old disk is gone not just physically but also from the pool configuration, which is now consistent.

Note. The ‘replace’ command hasn’t been used in steps 3 and 4; ‘attach’ and ‘detach’ have been used instead. On a mirror both routes end in the same place: ‘zpool replace’ resilvers the new device and drops the old entry in a single step, and it is the action ‘zpool status’ itself suggests in the output above. It is not limited to raidz, although there it is the only option, since ‘detach’ only applies to mirrors. Attaching first and detaching afterwards keeps the two steps separate, so the old entry is only removed once we have seen the new disk fully resilvered, which is reassuring when the original drive had serious issues.

Important. At this point everything is fine if the replacement disk is the same size, and no additional steps are required, so steps 5 and 6 can be skipped. Reading them won’t hurt you though.

If you, like me, want to upgrade to bigger disks, read on. Step 5 is also worth following if you want to replace the remaining disk with a same-sized one, for extra assurance that it doesn’t fail in a few days’ time.

5.- Replace the ‘old’ remaining disk/s (optional).

So far we’ve removed the failed disk (or simply an old one we wanted to upgrade), installed a new disk, partitioned and formatted it for ZFS use, attached it to the pool, checked that the resilver process has finished and detached the old disk from the pool.

If a mirror has a failed disk, one substitutes it as seen above and off one goes. However, if the disks are of similar age, and especially if they are the same make and model, there’s a good chance the remaining ones will fail before long. This is especially significant on systems that are quite busy. Because of this, replacing the remaining disk with a newer one can be a good idea.

This step basically repeats the ones above in the same order.

- Take the box down (if disks are not hot-swappable) and replace the disk.

- Check the pool status.

- Partition and format the newly added disk.

- Attach the new disk to the pool, write the boot code to it and wait for the resilver to finish.

- Remove the original disk from the pool.

Note. If the box doesn’t boot, move the new disk to another slot. Depending on your hardware, the system will look for a boot drive in the first slot; if that slot is empty or holds an empty disk, the box won’t boot.

6.- Grow the pool size (optional, only when replacing with bigger disks).

If we have replaced the pool’s disks with bigger ones, and only in that case, we need one more step in this disk replacement operation. The pool’s usable size still reflects the old, smaller disks.

Important. Mind that if the disk sizes are mismatched, the mirror’s size will be limited to the capacity of the smaller disk.
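As a quick illustration of that rule, the upper bound for a mirror is simply the smallest member. `mirror_capacity` is a hypothetical helper working on raw sizes in bytes, such as the Mediasize values reported by `geom disk list`.

```shell
#!/bin/sh
# mirror_capacity SIZE... (hypothetical helper): print the smallest of the
# given sizes in bytes, the upper bound for a mirror built from them.
mirror_capacity() {
    min=$1
    for size in "$@"; do
        if [ "$size" -lt "$min" ]; then
            min=$size
        fi
    done
    echo "$min"
}
```

`mirror_capacity 34359738368 68719476736` prints `34359738368`: with one 32GB and one 64GB disk the mirror is still a 32GB mirror. The usable pool size is somewhat lower than this bound, since the boot and swap partitions and ZFS metadata take their share.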

Let’s look at the new disks’ sizes.

albert@BSD-S:~ % geom disk list

Geom name: ada0

Providers:

1. Name: ada0

Mediasize: 68719476736 (64G)

Sectorsize: 512

Mode: r2w2e3

descr: VBOX HARDDISK

ident: VB60d99b44-f8cd9e04

rotationrate: unknown

fwsectors: 63

fwheads: 16

Geom name: ada1

Providers:

1. Name: ada1

Mediasize: 68719476736 (64G)

Sectorsize: 512

Mode: r1w1e2

descr: VBOX HARDDISK

ident: VBcc4dee8e-2bc371e8

rotationrate: unknown

fwsectors: 63

fwheads: 16

albert@BSD-S:~ %

And now let’s look at the pool’s available size.

albert@BSD-S:~ % zpool list

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

zroot 29.5G 971M 28.6G - 32G 0% 3% 1.00x ONLINE -

albert@BSD-S:~ %

Surprised? The pool is still sized for the 32GB disks, with 29.5GB of usable space. The EXPANDSZ column shows the 32G that is waiting to be claimed.

We need to expand the pool’s size. We’ll do that by expanding the capacity on each device.

albert@BSD-S:~ % sudo zpool online -e zroot ada0p3

albert@BSD-S:~ % sudo zpool online -e zroot ada1p3

We check the pool’s capacity again.

albert@BSD-S:~ % zpool list

NAME SIZE ALLOC FREE CKPOINT EXPANDSZ FRAG CAP DEDUP HEALTH ALTROOT

zroot 61.5G 972M 60.6G - - 0% 1% 1.00x ONLINE -

albert@BSD-S:~ %

As can be seen, the pool is now much bigger and the new disks’ capacity can be put to use.
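Whether a pool still has unclaimed space can be read from the EXPANDSZ column of ‘zpool list’, as the two listings above show. The sketch below automates that check; `expandable_pools` is a hypothetical helper that locates the column by its header name, so it assumes the header line is present.

```shell
#!/bin/sh
# expandable_pools (hypothetical helper): read `zpool list` output on
# stdin and print "<pool> <expandable size>" for every pool whose
# EXPANDSZ column holds something other than "-".
expandable_pools() {
    awk '
        NR == 1 { for (i = 1; i <= NF; i++) if ($i == "EXPANDSZ") col = i; next }
        col && $col != "-" { print $1, $col }
    '
}
```

Before the expansion, `zpool list | expandable_pools` should print `zroot 32G`; afterwards it should print nothing. Setting the pool property with `zpool set autoexpand=on zroot` before swapping disks is another route that may make the manual ‘online -e’ step unnecessary, though that has not been tested in this walkthrough.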

So far so good. If you’ve followed all the steps you have:

- Replaced a failed or working disk with a new one (due to disk issues or to expand the pool’s capacity).

- Partitioned and formatted the newly added disk.

- Attached the new disk to the mirror pool.

- Detached the old disk.

- Replaced the remaining old disk with a new one.

- Repeated the partitioning for this second new disk, its attachment to the pool and the detachment of the remaining old one.

- Expanded the pool’s capacity to fully leverage the newly installed disks.

At this point the operation of replacing a disk on a ZFS mirror pool concludes. The mirror now has new disks, bigger ones if you’ve chosen to do so, and the data can be safely used and stored for a longer time.

Conclusion.

Replacing disks on any system has always been a critical operation, one that needs planning ahead, good backups and sometimes even crossed fingers, especially when running hardware of dubious quality. ZFS has made a few things easier on the mind, but one must still take good care with these operations.

Also note that you may find yourself with a desktop box running as a home server, or even as ‘the company’s server’, so things are not ideal. For example, you may not be able to fit the new disk while the old one is still in the box because of space restrictions. Not every ZFS deployment runs on good quality hardware dedicated to storage. This means you may even have to physically move the disk into another slot for the system to boot, and then apply many of the above steps. I’ve done this on real hardware a couple of times, but always rehearsed the exercise in virtual environments beforehand.

Choose good quality hardware, or at least know what you have and what condition it is in. Be also aware of the differences in drive quality and capabilities so you don’t end up buying SMR disks and find yourself with eternal resilver times, or with write bottlenecks if you have a high rate of such operations, especially with small files. You almost always want CMR ones.
